Understanding Model Context Protocol (MCP) - Standardized AI Agent Integration

Technical analysis of MCP architecture, implementation patterns, and practical applications

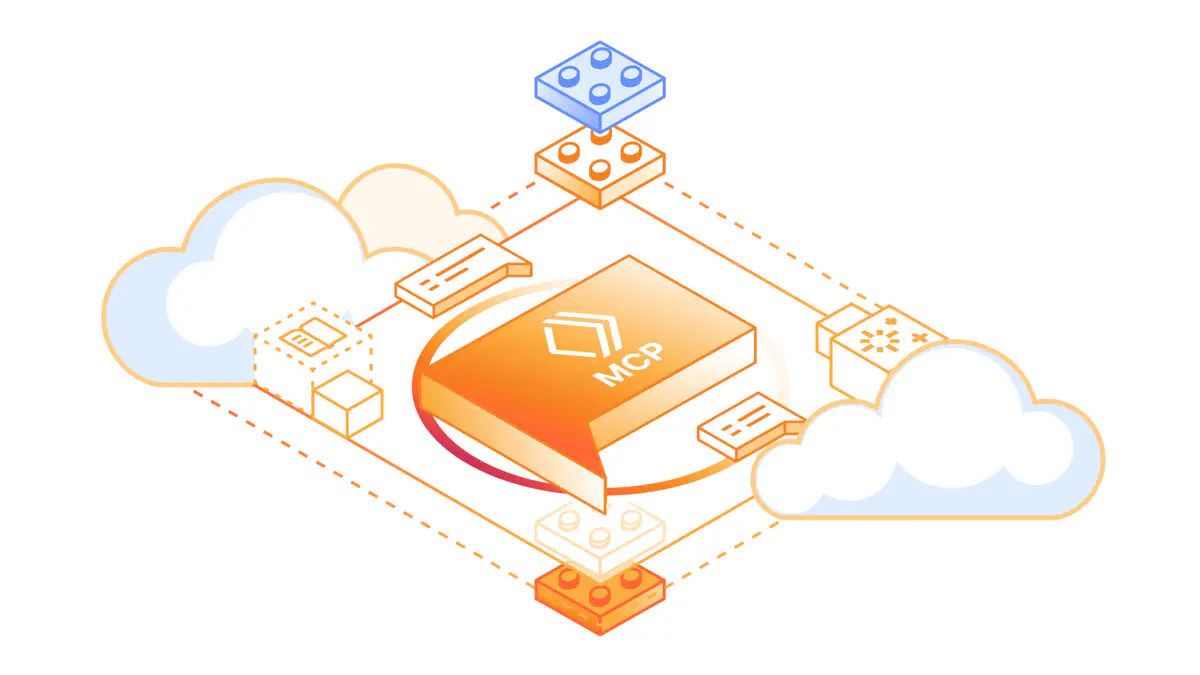

Model Context Protocol Architecture

Model Context Protocol ArchitectureUnderstanding Model Context Protocol (MCP): Technical Architecture and Implementation

Over the past year, I’ve been digging into how AI systems are moving from being “smart chatbots” to becoming autonomous agents that can actually complete tasks. A big enabler of this shift is the Model Context Protocol (MCP) — a standard way for agents to talk to external services and, importantly, to each other.

This post breaks down MCP in simple terms, why it matters, and how it looks in practice with some technical examples.

From Chatbots to AI Agents

Where LLMs Stop

Large language models (LLMs) like GPT, Claude, or Gemini are great at generating text, but they’re passive. If you ask, “Book me a flight tomorrow,” they’ll give you instructions, not an actual booking. They can’t take direct action on APIs, databases, or services by themselves.

What Agents Add

Agents fill this gap by combining LLM reasoning with tools and memory:

- Tools: Functions to interact with APIs (e.g., flight booking, databases)

- Memory: Persist preferences and state across tasks

- Workflow orchestration: Break tasks into steps and complete them end-to-end

- Error handling: Retry when things fail instead of stopping

In short, agents move from “answering” to actually doing.

The Pain: Messy Integrations

Before MCP, connecting agents to services was a mess. Each API had its own quirks:

- Different authentication (API keys, OAuth)

- Different endpoints (

/flights-listvs/listFlights) - Different response formats

So if you wanted an agent that worked with GitHub, Jira, Slack, and AWS, you had to write a patchwork of adapters. The more services you added, the more brittle it got.

Here’s a simple example of the old approach:

class DevOpsAgent:

def __init__(self):

self.github_adapter = GitHubAPIAdapter()

self.jira_adapter = JiraAPIAdapter()

self.slack_adapter = SlackAPIAdapter()

def deploy_feature(self, branch_name, ticket_id):

github_status = self.github_adapter.get_pr_status(branch_name)

jira_details = self.jira_adapter.get_ticket_info(ticket_id)

slack_approval = self.slack_adapter.request_approval(branch_name)

return self.coordinate_deployment(github_status, jira_details, slack_approval)

This works, but it scales badly. Every new service means more custom glue code.

Enter MCP: Standardizing the Middle Layer

MCP, introduced by Anthropic and now open-source, tackles this problem by defining a common protocol for agents and services to talk.

Instead of writing one-off integrations, an agent can connect to any MCP-compatible service using the same structure. Think of it like HTTP for AI agents: once you support the protocol, you can connect to anything that speaks it.

Core Components

- MCP Servers: Wrap a service (e.g., GitHub, Postgres, Stripe) and expose capabilities like “create_pull_request” or “query_database.” They handle auth, API quirks, and error recovery.

- MCP Clients: Live inside agents and connect to servers. They discover capabilities and forward tool calls.

- Protocol: Uses JSON-RPC 2.0 over stdio or WebSocket. Handles bidirectional communication, errors, and structured requests.

Example Configuration

{

"mcpServers": {

"github-integration": {

"command": "python",

"args": ["./servers/github/server.py"],

"env": { "GITHUB_TOKEN": "${GITHUB_TOKEN}" }

},

"database-connector": {

"command": "node",

"args": ["./servers/postgres/index.js", "--connection-string", "${DB_CONNECTION}"],

"capabilities": ["read", "write", "query"]

}

}

}

Once connected, the agent doesn’t need to care about how GitHub or Postgres works internally — it just calls the standardized tools.

How It Plays Out in Code

Here’s what a simplified server and client might look like:

Server (GitHub example)

from mcp import Server, Tool

class GitHubMCPServer(Server):

def __init__(self):

super().__init__("github-integration")

@Tool("create_pull_request")

async def create_pull_request(self, repo: str, title: str, branch: str):

# Simplified logic — real implementation calls GitHub API

return {"pr_number": 42, "status": "created"}

Client (Agent side)

from mcp.client import MCPClient

class DevAgent:

def __init__(self):

self.mcp = MCPClient()

async def init(self):

self.github = await self.mcp.connect("github-integration")

async def deploy_feature(self, repo, branch):

pr = await self.github.create_pull_request(repo, f"Deploy {branch}", branch)

return pr

Multi-Agent Workflows: Agent-to-Agent Protocol

MCP makes it easy to connect agents to services, but what about agents connecting to each other?

That’s where the Agent-to-Agent protocol (A2A) comes in, developed by Google. It standardizes how agents discover each other’s capabilities and delegate tasks.

For example:

- A flight agent specializes in finding and booking flights.

- A hotel agent specializes in hotel reservations.

- With A2A, the flight agent can ask the hotel agent, “Can you book hotels?” and pass it the request. Each stays specialized, but they cooperate.

This avoids bloated “do everything” agents and enables modular systems.

Real-World Applications

Some areas where I see MCP and A2A being useful:

- Development automation: Agents can handle PRs, run tests, and notify teams without brittle integrations.

- Ops monitoring: Agents correlate metrics from AWS, Datadog, and Elasticsearch through MCP servers.

- Data pipelines: One agent queries Stripe transactions, another queries BigQuery, and they combine findings.

- QA automation: An agent deploys to staging, runs unit + integration tests, and rolls back if failures occur.

Why MCP Feels Important

MCP solves a very real developer problem: the endless glue code needed to wire agents into different systems. By providing a standard interface, it:

- Simplifies integrations

- Makes agents more portable

- Encourages modular design

- Reduces duplication across teams

In many ways, it feels like the early days of HTTP or ODBC: a protocol that doesn’t make headlines but quietly becomes the backbone of a new ecosystem.

Closing Thoughts

MCP addresses common integration challenges in AI agent development by providing standardized communication patterns and service discovery mechanisms. Its design emphasizes:

Technical Benefits

- Consistent integration across services

- Modular architecture

- Built-in error handling and retries

- Efficient protocol design

Implementation Considerations

- Security: Auth, RBAC, and data privacy

- Performance: Connection pooling and caching

- Monitoring: Observability for debugging and optimization

- Reliability: Circuit breaker patterns and graceful degradation

MCP is still maturing, but it feels like one of those quiet, foundational protocols — similar to HTTP or ODBC — that will make AI agent systems more maintainable and interoperable in the long run.

References & Further Reading

- Anthropic MCP Documentation

- Model Context Protocol GitHub Organization

- Anthropic Announcement: MCP Launch

- The Verge Coverage: Anthropic Launch

- GitHub Docs: About MCP in Copilot

- Axios Analysis: Open Source MCP

- arXiv: MCP Security Risks and MCPSafetyScanner

- arXiv: MCP-Universe Benchmark

- arXiv: Survey on MCP and Future Research

- arXiv: MCP Bridge for Resource-Constrained Environments

- Microsoft Integration with Windows AI Foundry